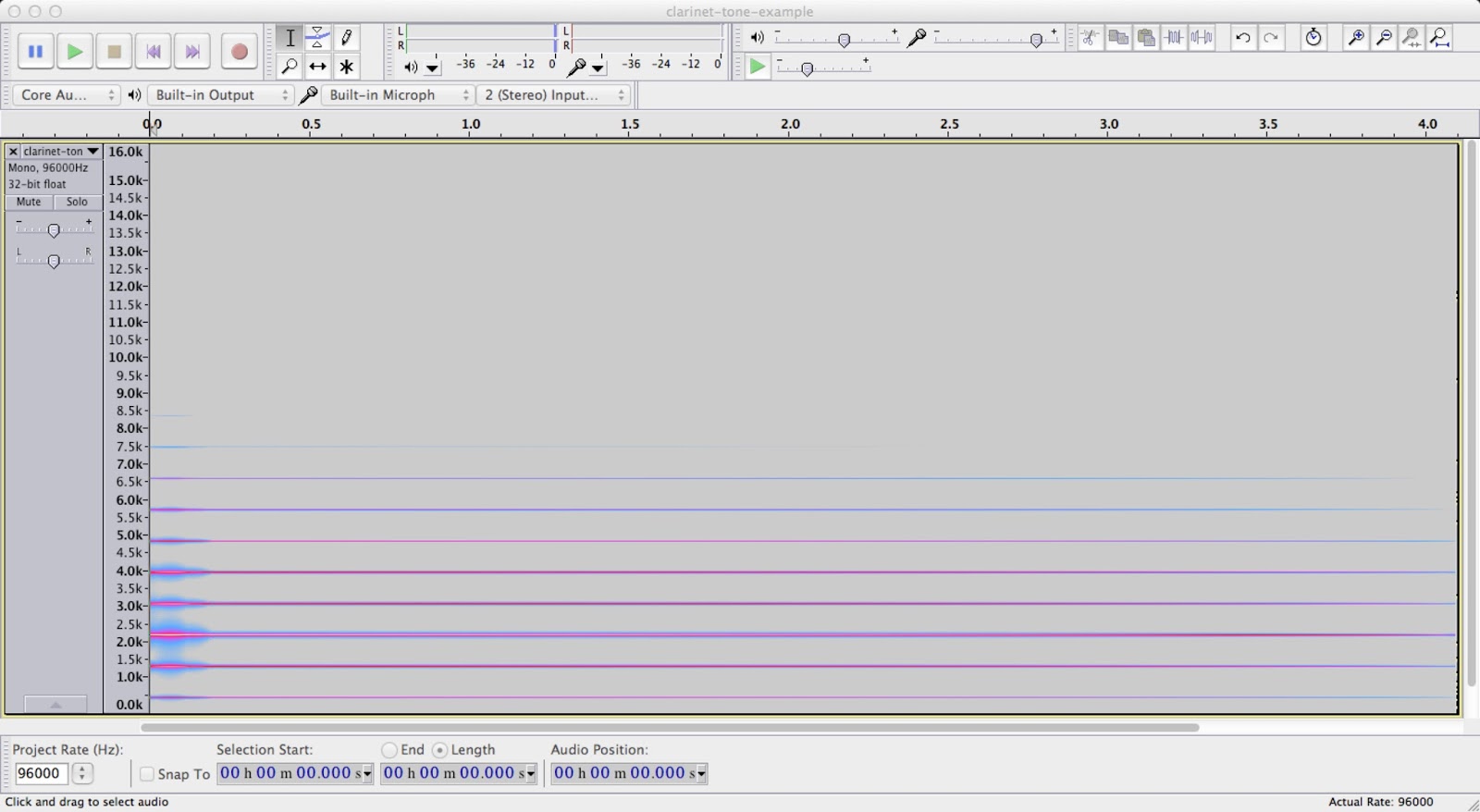

Here is another patch which I used to render the Moonlight Sonata.

This piece uses a percussive string sound similar to some of my other pieces. It has a very vague similarity to a piano (hence the silly name solar-piano), but in reality it sounds nothing like one. What it does have is a complex release: the signal is frequency and amplitude modulated by low-frequency noise. Whilst this produces a potentially pleasing and somewhat wistful sound for single notes, its effect on harmonies is to paint a complex soundscape just from the overlap of signals.
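To make the modulation idea concrete outside of Sonic Field, here is a minimal Python sketch of the amplitude half only (frequency modulation is not shown). It is not the patch itself: the one-pole smoothing filter, the `depth` parameter and the function names are assumptions chosen for illustration, standing in for the filtered and resampled white noise that the patch multiplies into the note.

```python
import math
import random


def slow_noise(n, smoothing=0.999, seed=0):
    """White noise through a one-pole low-pass, scaled to a peak of 1.

    The one-pole filter is an illustrative stand-in, not the filter
    used in the patch.
    """
    rng = random.Random(seed)
    out = []
    y = 0.0
    for _ in range(n):
        y = smoothing * y + (1.0 - smoothing) * rng.uniform(-1.0, 1.0)
        out.append(y)
    peak = max((abs(v) for v in out), default=0.0) or 1.0
    return [v / peak for v in out]


def amplitude_modulate(signal, depth=0.5, seed=0):
    """Multiply the signal by (1 + depth * slow noise)."""
    noise = slow_noise(len(signal), seed=seed)
    return [s * (1.0 + depth * m) for s, m in zip(signal, noise)]


SAMPLE_RATE = 8000  # hypothetical rate, kept low so the example runs quickly
tone = [math.sin(2 * math.pi * 220 * i / SAMPLE_RATE) for i in range(SAMPLE_RATE)]
wobbly = amplitude_modulate(tone, depth=0.5)
```

With `depth` at 0.5 the gain drifts somewhere between 0.5 and 1.5, so a single held tone slowly swells and fades; when several such notes overlap, each one drifts independently.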

Because this sound generator is good at filling the space between the attacks of notes, it allowed me to slow the music down by around 5:1. A handmade reverberation impulse response then helped further by smoothing everything into a near-realistic but overly wet space.
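The reverberation here is a convolution of the signal with an impulse response, applied one grain at a time and then mixed back together at each grain's start time. A rough Python sketch of that overlap-add idea follows. It makes several simplifying assumptions that differ from the patch: the grains are plain non-overlapping slices, the convolution is done directly in the time domain rather than by multiplying spectra, and the per-grain level matching and fade-out are left out. All function names are hypothetical.

```python
def convolve(signal, impulse):
    """Direct (time-domain) linear convolution of two lists of samples."""
    out = [0.0] * max(len(signal) + len(impulse) - 1, 0)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse):
            out[i + j] += s * h
    return out


def overlap_add_reverb(signal, impulse, grain_length):
    """Convolve grain by grain, adding each wet grain back at its start time."""
    out = [0.0] * max(len(signal) + len(impulse) - 1, 0)
    for start in range(0, len(signal), grain_length):
        grain = signal[start:start + grain_length]
        wet = convolve(grain, impulse)
        for k, v in enumerate(wet):
            out[start + k] += v
    return out


def mix_wet_dry(dry, wet, wet_gain=0.5):
    """Pad both signals to the same length and add the wet one at wet_gain."""
    n = max(len(dry), len(wet))
    dry = dry + [0.0] * (n - len(dry))
    wet = wet + [0.0] * (n - len(wet))
    return [d + wet_gain * w for d, w in zip(dry, wet)]


dry = [1.0, 0.0, 0.5, -0.25, 0.75]
impulse = [1.0, 0.5, 0.25]
wet = overlap_add_reverb(dry, impulse, grain_length=2)
mixed = mix_wet_dry(dry, wet)
```

Because convolution is linear, processing in grains gives the same result as convolving the whole signal at once; the point of working in grains is to keep each transform small.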

The patch is below this video:

[

Reverberator

============

]

{

?signal Magnitude !mag

(>signal,?grain-length Silence)Concatenate !signal

(

(?mag,0)Eq,

{

},

{

(>signal,?grain-length Silence)Concatenate !signal

>signal FrequencyDomain !signal

(>convol,>signal)CrossMultiply !signal

>signal TimeDomain !signal

?signal Magnitude !newMag

(>signal,(>mag,>newMag)/)NumericVolume !signal

[ tail out clicks due to amplitude at end of signal ]

(

(

(0,1),

(100,1),

((?signal Length,100)-,1),

(?signal Length,0)

)NumericShape,

>signal

)multiply !signal

}

)Choose Invoke

>signal

}!reverb-inner

{

?convol Length !grain-length

(>convol,?grain-length Silence)Concatenate FrequencyDomain !convol

(>signal,?grain-length Silence)Concatenate !signal

Bunch !out

(

(>signal,?grain-length)Granulate,

{

^signal ^time

((?reverb-inner Do,>time),>out)AddEnd !out

}

)InvokeAll

>out MixAt Normalise

}!reverb

{

(100,?frequency)SinWave MakeSawTooth !filter

(>filter,?length,1)ButterworthLowPass !filter

?length WhiteNoise !signal

((0,0),(?attack-a,0.25),(?attack-b, 1),((?length,0.8)*, 1),(?length,0))NumericShape !sinEnv

(>signal,?sinEnv)Multiply !signal

(>signal,>filter)Convolve Normalise !signal

(>signal,2000,5)ButterworthLowPass !signal

(>signal,?frequency,2)ButterworthHighPass !signal

(>signal,?frequency,1)ButterworthLowPass !signal

(((0,0),((?length,0.75)*,1),(?length,0.5))NumericShape,(?length,2.5)SinWave)Multiply !vvib

(1,>vvib pcnt+50)DirectMix !vvib

(>signal,?vvib)Multiply Saturate Normalise !signal

(>signal,0.6,0.5,?frequency Period)ResonantFilter Normalise !wave

(

-0.03,0.2,0,-1,0.2,2,

?wave

)WaveShaper Normalise !signal

(>signal,?sinEnv)Multiply !signal

((0,64),((?length,0.25)*,?frequency),(?length,64))NumericShape !lower

((0,?frequency),((?length,0.25)*,(?frequency,4)*),(?length,?frequency))NumericShape !upper

(>signal,>lower,>upper,2)ShapedButterworthBandPass Normalise !signal

(>signal,?volume)Volume

} !flute

{

((?attack,2)*,?pitch)ExactSinWave MakeSquare !hit

(?hit,(?pitch,2)*,4)ButterworthLowPass !hit

(

(0,1),

(45,0.5),

(75,0)

)NumericShape !hit-env

(

>hit,

>hit-env

)Multiply Normalise !hit

(>hit,?hit-strength)Volume !hit

(?attack,?decay,?sustain,?release)+ !length

(

(?length,?pitch)ExactSinWave dbs+3,

(?length,(?pitch,2)*)ExactSinWave dbs-12,

(?length,(?pitch,3)*)ExactSinWave dbs-18

)Mix !note

(

(0,0),

(?attack,1),

((?attack,?decay)+,0.5),

((?attack,?decay,?sustain)+,0.1),

(?length,0)

)NumericShape !note-env

(

>note,

?note-env

)Multiply Normalise !note

(

1,

0,

1,

1,

4,

10,

>note

) WaveShaper Normalise !note

(

0,

0,

1,

1,

4,

10,

>note

) WaveShaper Normalise !note

(>note,?pitch,1)ButterworthHighPass Normalise !note

(>note,1500,2)ButterworthLowPass Normalise !note

(

((0,5),((?attack,?decay,?sustain)+,1))Slide,

?note-env

)Multiply !twang

(?twang,?release silence)Concatenate !twang

( 1,>twang pcnt+20)DirectMix !twang

(

>twang,

>note

)Multiply !note

(

>hit dbs+6,

>note

)Mix !note

(?note,((0,1.001),(?note length,0.999))NumericShape)Resample !note-1

(?note,((0,0.999),(?note length,1.001))NumericShape)Resample !note+1

(>note,>note-1 pcnt-50,>note+1 pcnt+50)Mix Normalise !note

64 !base

(

(?note,110,2)ButterworthLowPass Normalise,

(

(-7,?base Period),

(-7,(?base,1.25)* Period),

(-7,(?base,1.50)* Period),

(-7,(?base,1.75)* Period)

),

-20

)MultipleResonantFilter Normalise !sounding-board

(>sounding-board,110,2)ButterworthLowPass Normalise !sounding-board

((0,0),(125,0),(500,1),((?note length,250)-,1),(?note length,0))NumericShape !damper

(>sounding-board,25,4)ButterworthHighPass !sounding-board

(

>sounding-board,

?damper

)Multiply Normalise !sounding-board

(>sounding-board,25,4)ButterworthHighPass !sounding-board

(

>sounding-board,

?damper

)Multiply Normalise !sounding-board

(>sounding-board,?pitch,1)ButterworthHighPass !sounding-board

(250,?sounding-board length,>sounding-board)Cut !sounding-board

(

>sounding-board,

>note

)Mix Normalise !note

(

(

250 Silence,

(?damper,?note)Multiply Saturate Normalise,

)Concatenate,

(

(-3,(?pitch,0.05)* Period),

(-6,(?pitch,0.2)* Period),

(-8,(?pitch,0.25)* Period),

(-8,(?pitch,0.333)* Period),

(-9,(?pitch,0.5)* Period),

(-6,(?pitch,1.5)* Period),

(-6,(?pitch,2.0)* Period)

),

-80

) MultipleResonantFilter Normalise !res

(0,?length,>res)Cut !res

(

0,

0,

1,

1,

4,

10,

>res

) WaveShaper Normalise !res

(

>note,

>res Normalise pcnt+15

)Mix !note

(?note,(?pitch,2)*,1)ButterworthLowPass Normalise !note

(>note,500 Silence)Concatenate !note

[ Mix in the characteristic white noise ]

(

(

1,

(

(

?note length WhiteNoise,

?pitch,

4

)ButterworthHighPass

,(?pitch,1.25)*

,4

)ButterworthLowPass Normalise pcnt+5

)DirectMix,

>note

)Multiply !note

(>note,15,2)ButterworthHighPass !note

(

(0,0),

(500,0),

(1000,1),

(?note length,1)

)NumericShape !note-env

(

>note-env,

?note

)Multiply !sample

(>sample,25,4)ButterworthHighPass !sample

((?sample Length WhiteNoise,500,6)ButterworthLowPass,0.01)DirectResample Normalise !multi

(>sample,(1,>multi)DirectMix)Multiply !sample

>sample !signal

[

(

(

((2000 WhiteNoise,2)Power,2000,1)ButterworthLowPass Normalise,

((0,0),(80,0),(90,1),(2000,0))NumericShape

)Multiply,

(

((5000 WhiteNoise,3)Power,1000,2)ButterworthLowPass Normalise,

((0,0),(125,0),(135,1),(5000,0))NumericShape

)Multiply

)Mix Normalise !convol

]

(

?reverb Do pcnt+50,

!sample

)Mix

((?sample Length WhiteNoise,500,6)ButterworthLowPass,0.01)DirectResample Normalise !multi

(>sample,(1,>multi dbs-6)DirectMix)Multiply !sample

(

0,

0,

1,

1,

4,

10,

>sample

) WaveShaper Normalise !sample

(

(((0,0.125),(4000,0.25),(?sample Length,1))NumericShape,>sample)Multiply,

>note

)Mix Normalise !note

(

((?note,1000,4)ButterworthHighPass,0.87)Power dbs-6,

>note

)Mix Normalise !note

( >note,?volume)NumericVolume

}!solar-piano

{

(?velocity,1.5)** !volume

(((?velocity,1)+,2)**,1)- !velocity

(

(?velocity,0.5)lt,

{

120 !attack

400 !decay

},{

50 !attack

400 !decay

}

)Choose Invoke

(?decay,(?length,4.5)/)+ !sustain

?length !release

?velocity !hit-strength

[bass boost]

(

(?pitch,220)lt,

{

(>hit-strength,0.5)+ !hit-strength

(?volume,0.33)+ !volume

},{

}

)Choose Invoke

(

"C",?count,

"P",?pitch,

"V",?volume,

"H",?hit-strength,

"A",?attack,

"D",?decay,

"S",?sustain,

"R",?release

)Println

?solar-piano Invoke !signal

>signal

}!play-solar

{

Bunch !notes

0 !count

0 !prev-high

(

?track,

{

^tickOn ^tickOff ^note ^key ^velocity

(

(?count,?notesToPlay)lt,

{

[ Set up the note ]

(?tickOn,?beat)* !at

((?tickOff,?tickOn)-,?beat)* !length

(Semitone,?key)** !multi

(?baseSound,>multi)* !pitch

[(>pitch,2)/ !pitch]

[(>pitch,6)/ !pitch]

(>velocity,100)/ !velocity

(>length,1.5)* !length

[ Play the note ]

((?play-solar Do,?at),>notes)AddEnd !notes

},{

}

)Choose Invoke

(>count,1)+ !count

}

)InvokeAll

>notes MixAt Normalise

}!play

"C0" Note !baseSound

8.00 !beat

2000 !shortCut

9999 !notesToPlay

"temp/ml1.mid" ReadMidiFile

^t1

^t2

?t2 !track

"temp/Solar-Piano-Internal.wav" Readfile ^cLeft ^cRight

>cLeft !convol

?play Do !left

>cRight !convol

?play Do !right

[

Post

===

]

"Post Processing" PrintLn

((?left,?right),"temp/moon-mix.wav")WriteFile32

"temp/moon-impulse-b.wav" ReadFile ^revl ^revr

"temp/moon-mix.wav" ReadFile ^left ^right

?left !signal >revl !convol ?reverb Do Normalise !wleft

?right !signal >revr !convol ?reverb Do Normalise !wright

(

>wleft,

>left

)Mix Normalise !left

(

>wright,

>right

)Mix Normalise !right

((>left,>right),"temp/moon-post-b.wav")WriteFile32
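For readers who do not know the patch language, the per-note arithmetic in `play` and `play-solar` above can be restated in Python roughly as follows. This is a sketch under assumptions: it takes `**` to be exponentiation and `Semitone` to be the equal-tempered ratio 2^(1/12), and the function name and return shape are invented for illustration.

```python
SEMITONE = 2.0 ** (1.0 / 12.0)  # assumed meaning of Semitone in the patch


def note_parameters(key, midi_velocity, base_hz):
    """Return (pitch, volume, hit_strength) for one MIDI note.

    Mirrors the arithmetic in the listing: pitch is the base frequency
    raised by `key` semitones, volume is velocity^1.5, and the hit
    strength is (velocity + 1)^2 - 1. Notes below 220 Hz get a boost.
    """
    pitch = base_hz * SEMITONE ** key
    velocity = midi_velocity / 100.0
    volume = velocity ** 1.5
    hit_strength = (velocity + 1.0) ** 2 - 1.0
    if pitch < 220.0:  # the "bass boost" branch
        hit_strength += 0.5
        volume += 0.33
    return pitch, volume, hit_strength


# Hypothetical inputs: 24 semitones above a 110 Hz base, MIDI velocity 100.
pitch, volume, hit_strength = note_parameters(24, 100, 110.0)
```

Note that the volume is computed from the velocity before the velocity is reshaped, so loud notes get a disproportionately strong hit without the overall level rising as steeply.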